The Deepfake Problem: How a Senior Citizen Became the First Victim of India’s Deepfake Technology Scam

In an increasingly digital world, the boundaries between reality and deception have grown porous. The advent of deepfake technology, which employs sophisticated algorithms to manipulate audio and video content, has ushered in a new era of digital deception. A case in point may be India’s first deepfake con, which has raised crucial questions about this insidious technology’s potential to reshape crime.

In Kozhikode, Kerala, Radhakrishnan P S, a 72-year-old retiree from Coal India, became perhaps India’s first casualty of deepfake technology.

One morning, the tranquillity of his retirement was shattered by an unexpected phone call that would shake the unsuspecting senior citizen to the core.

On the other end of the line was a voice claiming to be an old colleague who desperately needed money for hospital expenses. Although touched by his former coworker’s apparent distress, Radhakrishnan sought reassurance that this was not a hoax, and, to his surprise, the caller agreed to a video chat.

During the video call, which is now at the center of the case, the supposed colleague deliberately kept their face blurry, making it difficult for Radhakrishnan to discern who it was. However, the voice, eerily similar to his colleague’s, convinced him.

In a moment of compassion, Radhakrishnan transferred INR 40,000 via Google Pay to the number the caller provided over WhatsApp.

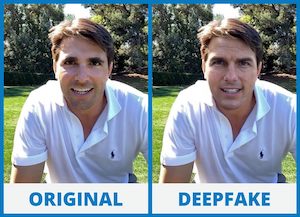

Little did he know that the voice and video were skillfully manipulated deepfakes: audio or video altered by algorithms to replace the original subject with someone else, creating a convincing illusion of authenticity.

Radhakrishnan’s claim of falling victim to a deepfake has set a precedent in India’s cybercrime landscape.

The democratization of AI tools has made deepfakes accessible to a broader audience, raising concerns about the potential for such deceptive practices to become widespread.

But does this single incident signal the arrival of the “crime of the future”?

Can scammers so effortlessly deploy deepfake technology to orchestrate their schemes?

The Intriguing Case

What distinguishes this case is the use of deepfake technology during a video call.

As Beeraj Kunnummal, an officer at the cyber crime police station in Kozhikode, explained, the suspect strategically positioned their face close enough to the camera to be recognizable as a face but too obscured to be clearly identified.

Because blurry video calls are not uncommon and the voice uncannily resembled his colleague’s, Radhakrishnan was satisfied that the interaction was genuine and transferred the funds.

What further puzzled him was the scammer’s familiarity with their mutual friends and with family photos shared on WhatsApp, along with the remarkably convincing fake voice.

Authorities have traced the financial transaction to a suspect named Kaushal Shah, a 41-year-old individual with a history of involvement in multiple financial fraud cases.

Shah’s knack for assuming false identities and utilizing various mobile numbers and bank accounts has made tracking him challenging.

The Mechanics of Deepfake Crimes

The incident raises questions about the sophistication of deepfake technology and its potential for criminal use. While deepfakes can be convincing, experts assert that creating a truly realistic deepfake video is not as straightforward as it may seem.

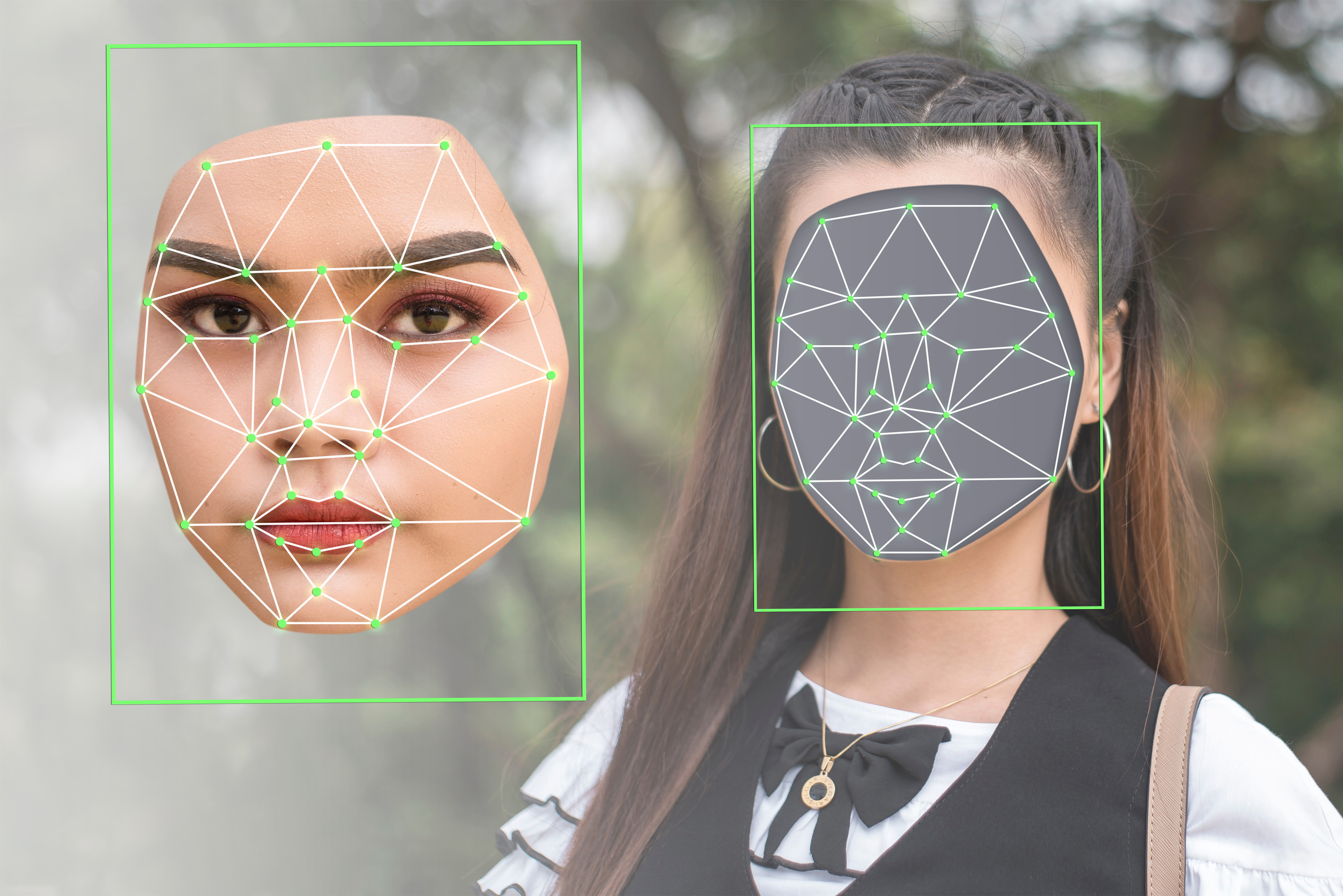

Sunny Nehra, founder of the digital forensics platform Secure Your Hacks, emphasizes the demanding prerequisites for generating a convincing deepfake: tens of thousands to hundreds of thousands of images of the targeted individual, plus a substantial audio dataset. The more high-quality images available, the more convincing the deepfake becomes.

In Radhakrishnan’s case, the scammer likely used an app or basic face-swapping techniques to turn a photograph into a deceptive video.

Audio manipulation likewise requires a substantial amount of data, and the results depend on the language involved: English may yield better results, whereas Hindi, for instance, presents greater challenges without an extensive audio dataset.

Deepfake technology has enabled scammers to engage in various fraudulent activities, including identity theft, blackmail, political manipulation, and celebrity scams.

Individuals are advised to exercise caution online, avoid trusting strangers too quickly, limit the personal data they share, and enable multi-factor authentication on their online accounts to protect against such threats.

Global Concerns and Evolving Challenges

Deepfake technology has become a top concern on the global security agenda, with governments worldwide growing increasingly apprehensive about its implications.

Previous instances of deepfake-related fraud, including an audacious heist in Japan and a scam in China, have brought the potential for AI-enabled financial crimes to the fore.

As deepfake scams evolve, law enforcement agencies face formidable challenges; the technology’s increasing sophistication makes distinguishing between genuine content and deepfakes more difficult.

Tricksters can operate from anywhere in the world, obscuring their digital footprints and leaving investigators chasing shadows. The ephemeral nature of digital media further complicates matters, as scammers can easily delete or hide incriminating evidence.

Moreover, the legal framework surrounding deepfakes remains a work in progress and often lacks clarity on what constitutes a criminal offence. This ambiguity can discourage victims from reporting such crimes, creating an additional hurdle for law enforcement.

Addressing the Deepfake Conundrum

Efforts to combat deepfake scams are essential as technology races ahead of legislation. India, for instance, has no dedicated regulations for deepfakes, although existing legislation, such as the Digital Personal Data Protection Act 2023 (DPDPA 2023), applies in certain instances.

Experts suggest that effectively addressing the challenges posed by deepfakes requires a holistic approach that encompasses fraud prevention, copyright protection, misinformation control, and defamation mitigation, while still supporting the positive aspects of disruptive technologies. Such an approach would require ongoing collaboration among governments, law enforcement, and technology experts.

In a world where technology continually outpaces the law, law enforcement agencies must stay alert to deepfake scams, which have the potential to disrupt elections, deceive individuals, and erode trust in the digital realm.

As the search for Kaushal Shah and others like him continues, the battle against deepfake deception persists, with its perpetrators lurking in the shadows, ready to strike again.

The Last Bit

The Radhakrishnan P S case is an example of how financial crimes are now being committed.

Deepfake technology, once a niche curiosity, has emerged as a potent tool in the hands of scammers and malefactors. Its potential for financial fraud, identity theft, misinformation, and manipulation is vast, and its ramifications reverberate on a global scale.

As law enforcement agencies race to adapt and develop countermeasures, and governments navigate the complex realm of regulation, one truth remains self-evident: vigilance in the digital age is paramount.